The VR Rehab Project

The main project that I've been working on is the VR stroke rehab project. This is an ongoing project run by the psychology department at my alma mater, and I was recruited to handle the programming side of things. I got pretty lucky in being the right person at the right time: the COVID pandemic meant that the university had a lower-than-average graduation rate for my specialization (there were only 2 game dev graduates in total), and I had done a VR project for my end-of-degree capstone. This meant that when the psychology department asked around looking to hire a Unity developer for a virtual reality project, I was the only name that came up.

Basically, one of the potential effects of a stroke (an interruption of blood flow to part of the brain, caused by either a blockage or a bleed) is a loss of mobility on one side of the body. This is notable because all the muscles and stuff in the body are physically fine, but due to the damage to the brain a patient essentially needs to "relearn" how to move the limb. The effects of a stroke can manifest in different ways depending on where in the brain the damage is, but this project was specifically focused on reduced range of motion in the upper body.

The VR element is based on an existing form of rehab called mirror therapy. An abridged explanation is that if you can see your arm moving while you're trying to move it, you form those neural connections faster. Traditionally this is done by hiding the weaker limb behind a mirror, so that the reflection of the healthy limb appears in its place, and instructing the patient to try to lift both arms at the same time. The catch is that your exercise options are limited by the need to maintain the visual effect. What we are able to do in VR is apply a multiplier to the virtual movements of the weaker limb, meaning that in the headset it appears to move further than you physically move it.
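For the curious, here is a minimal sketch of what "apply a multiplier" can look like. It is written in Python rather than the project's Unity code, and the function name, the idea of measuring from a fixed rest point, and the example numbers are all assumptions made for illustration.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def amplify_position(tracked: Vec3, rest: Vec3, gain: float) -> Vec3:
    """Scale the hand's displacement from a rest point by `gain`.

    gain == 1.0 leaves the virtual hand on top of the real one;
    gain == 2.0 makes it travel twice as far from the rest point.
    (Hypothetical helper, not taken from the project.)
    """
    return tuple(r + (t - r) * gain for t, r in zip(tracked, rest))


# Example: the real hand has moved 10 cm right, 20 cm up and 30 cm forward
# of the rest point; with a gain of 2 the virtual hand moves twice that.
real_hand = (0.10, 1.20, 0.30)
rest_point = (0.00, 1.00, 0.00)
virtual_hand = amplify_position(real_hand, rest_point, gain=2.0)
```

Scaling the displacement from a rest point, rather than the raw position, means the virtual hand lines up with the real one whenever the arm is at rest.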

I should take a moment and acknowledge the team that works on the VR project. Due to how the funding works, the number of people working on it changes often, with students from the university being hired on seasonally for summer or school terms for a few months at a time. The core development team is just me, though depending on the time of year we have had up to 4 additional programmers and 3 additional honors students working on it in some capacity. Technically my job title is research assistant, but I've got the responsibilities of a lead programmer, project manager, and probably some other stuff I don't know the name for. At the time of writing, the team is myself and another psych student (the boss is on sabbatical), but as the school year starts back up I expect we'll pick up a few students to run experiments.

The VR amplification turned out to be a neat effect to play around with, and feels like a 3D version of increasing the sensitivity of a mouse cursor. Within a few weeks I had a working version of the system and had developed a calibration protocol so that the amplification would be customized to roughly match the patient's healthy range of motion. Aside from this, a fair amount of time went into creating tasks (mini-games) so the user had things to do with the amplification, as well as an assorted array of features that my boss thought would be useful over the course of working on this for a few years. This is where the bulk of my work ended up going, as some of these features were new, complex, and unusual.
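As a rough illustration of the calibration idea, one simple way to pick a multiplier is to divide the healthy arm's reach by the weaker arm's reach. This is a Python sketch under my own assumptions (the function name, the clamp limits, and measuring reach in metres are all made up for the example), not the actual protocol.

```python
def calibrate_gain(healthy_reach_m: float,
                   affected_reach_m: float,
                   max_gain: float = 3.0) -> float:
    """Pick a multiplier so the weaker arm appears to reach as far as the healthy one.

    Both reaches are distances measured during a calibration step.
    The result is clamped to [1.0, max_gain]; that cap is an assumed
    safety limit for this sketch, not a value from the project.
    """
    if healthy_reach_m <= 0 or affected_reach_m <= 0:
        raise ValueError("reach measurements must be positive")
    gain = healthy_reach_m / affected_reach_m
    return min(max(gain, 1.0), max_gain)


# Example: the healthy arm reaches 60 cm and the weaker arm 30 cm,
# so the virtual weaker arm needs to move twice as far as the real one.
gain = calibrate_gain(0.60, 0.30)
```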

Hand Tracking

The first thing I had to implement outside our original scope was hand tracking, meaning we used the headset to track finger positions rather than having the participant hold a controller. Hand tracking had only just been released, a few days after my hiring date, so it was sort of a "wild west" in terms of not having much information on how to get it working. To oversimplify how it works, each finger has a "curl" value representing how open or closed it is (0 being like a high-five, and 1 being a closed fist). We were able to modify our amplification code to work with this, so we could also amplify the grips for patients who had difficulty opening or closing their hands.
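To give a feel for what amplifying a curl value could mean, here is one possible approach sketched in Python: stretch the range the patient can actually manage so that it fills the full 0 to 1 range. The remapping strategy and every name below are assumptions for illustration; the project's real code may work differently.

```python
def amplify_curl(raw_curl: float, observed_min: float, observed_max: float) -> float:
    """Stretch a patient's usable curl range onto the full 0..1 range.

    raw_curl     -- tracked curl (0 = open hand, 1 = closed fist)
    observed_min -- most open the patient managed during calibration
    observed_max -- most closed the patient managed during calibration
    (Hypothetical calibration values, assumed for this sketch.)
    """
    if observed_max <= observed_min:
        raise ValueError("observed_max must be greater than observed_min")
    stretched = (raw_curl - observed_min) / (observed_max - observed_min)
    # Clamp so the virtual finger never bends past fully open or fully closed.
    return min(1.0, max(0.0, stretched))


# Example: a patient who can only move between 0.25 and 0.75 curl.
virtual_curl = amplify_curl(0.5, observed_min=0.25, observed_max=0.75)
```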

VR Coach

Once we had that working, my boss wanted to implement a virtual avatar that would demonstrate the different tasks for the user, accompanied by a voice-over. This ended up being an extremely complicated request that continues to give me grief, so I had to break it down into smaller features that were eventually combined to create the effect. Each of these could probably be its own lengthy post in a more code-oriented blog, but personally I don't find code specifics that interesting.

The first thing we had to do was figure out some way to record animations. I ended up writing code for a motion capture system using the headset that would record the VR positions for the hands and head, and with the help of some kinematic algorithms, move our 3D character around. We hit a road bump with this approach pretty quickly though: the hand tracking. You see, at the time we were working on this, our only option for hand tracking was to use the official Oculus SDK for Unity, so we were locked into the terrible development system Meta had made. The most glaring issue was in how they designed their hand models: the system input for hand movements didn't make any fucking sense and refused to match nicely to any hand models we had, or could make in Blender. It's no exaggeration that the co-op students and I spent months trying to find or create a 3D hand that would match what the system was giving us, with no success. The solution I ended up implementing was a sort of "translation" code that would convert each of the 32 hand tracking variables onto whatever model we were using, with hardcoded conversion values. Years later in development, a similar approach showed up in official Unity integrations from other developers, and from what I can tell it is the standard practice when dealing with this SDK. I am quite pleased to have figured this out on my own.
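The "translation" idea boils down to a lookup table with one hardcoded conversion per tracked value. Here is a toy version in Python. The variable names, bone names, and numbers are invented for illustration and do not come from the Oculus SDK or from the project.

```python
from typing import Dict, NamedTuple, Tuple


class Conversion(NamedTuple):
    bone: str          # bone on the target hand model (made-up names)
    axis: str          # which local rotation axis to drive
    scale_deg: float   # degrees of rotation per unit of tracked value
    offset_deg: float  # constant correction for the model's rest pose


# One hardcoded entry per tracked variable; only three are shown here.
TRANSLATION_TABLE: Dict[str, Conversion] = {
    "index_curl": Conversion("Index1", "x", 90.0, 0.0),
    "thumb_curl": Conversion("Thumb1", "z", 60.0, -10.0),
    "pinky_curl": Conversion("Pinky1", "x", 95.0, 5.0),
}


def translate(tracked: Dict[str, float]) -> Dict[Tuple[str, str], float]:
    """Convert tracked values into per-bone rotation angles for one model."""
    pose = {}
    for name, value in tracked.items():
        conv = TRANSLATION_TABLE.get(name)
        if conv is None:
            continue  # skip values this model has no mapping for
        pose[(conv.bone, conv.axis)] = value * conv.scale_deg + conv.offset_deg
    return pose


pose = translate({"index_curl": 0.5, "thumb_curl": 1.0})
```

Swapping in a different hand model then only means writing a new table, not new tracking code.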

The motion capture system we created had the advantage of being done entirely in engine, so the workflow for recording was essentially just to press the record button and then perform the task as normal. This approach also meant that even for tasks where the participant was required to interact with other objects (like picking up a bottle), we were able to record the movements of those objects as well. I left the bulk of the work of recording these animations to our summer co-op students, as we had a lot of tasks to process. Later on the lab got a Qualisys motion capture system installed, similar to how professional motion capture is done for film, and we're working on switching over to that higher-quality recording approach.

It is worth mentioning that at one point I had integrated a lip-sync system for the avatar, but removed that feature after users reported it being "creepy". Dropping this was fine with me, as it was one less thing I had to maintain.

Voice Commands

At one point, the team became concerned about a hypothetical user who had a stroke so severe that pushing a button would be too difficult. Despite this essentially meaning they would be unable to perform any of the tasks we had designed, the boss tasked me with coming up with a voice recognition menu, so users could just say what they wanted to do. To be fair, it wasn't that hard to implement, I learned a lot about the process, and like other aspects of the project, I went a little overboard. The gist of it is that we used a third-party service to handle the speech-to-text interpretation, which would return an assigned "intent". This meant that we could trigger certain events even if the user didn't explicitly say the keyword they wanted. For example, after the terrifying tutorial avatar finishes explaining how to lift a block, we ask the participant if they want to play the task now or repeat the instructions. If they didn't say the keyword to continue, we could still have the program trigger the response if they said something like "I don't want to restart, I want to do the other thing". Voice commands were recently disabled due to privacy concerns with Meta, but I thought it was a neat little feature.
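On the application side, working with intents instead of keywords mostly means routing whatever label the service returns to the right action. A small Python sketch of that routing follows. The intent names and the reprompt fallback are my own assumptions, and the speech service itself is deliberately left out.

```python
from typing import Callable, Dict, Optional


def make_voice_menu(handlers: Dict[str, Callable[[], str]]) -> Callable[[Optional[str]], str]:
    """Build a dispatcher that maps a recognized intent to an action.

    `handlers` maps intent names (hypothetical, chosen for this sketch)
    to functions. Anything unrecognized falls back to asking again.
    """
    def dispatch(intent: Optional[str]) -> str:
        handler = handlers.get(intent)
        if handler is None:
            return "reprompt"
        return handler()
    return dispatch


menu = make_voice_menu({
    "start_task": lambda: "starting task",
    "repeat_instructions": lambda: "repeating instructions",
})

# The service decides that "I want to do the other thing" means start_task;
# the menu only ever sees the intent label, never the raw sentence.
result = menu("start_task")
```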

Eye Tracking

At some point, the lab got additional funding which we were forced to spend on equipment instead of on extending my employment, so the boss purchased a headset with eye tracking integrated into it. Getting that working in Unity was pretty easy, thanks to the sizeable community that had been using these types of systems for VRChat avatars. I don't have a lot to say on the implementation other than that we did collect some data in a study using it, and that it was pretty neat. Something we're building right now is an aim-assistance task where a user throws a ball, and depending on where they are looking, we "adjust" their throw to head to that point, while still appearing to match their attempted throw.

Emailed Reports

Once the project started to be more stable, my boss asked me to implement a way to send reports out from the headset without having to hook up to a desktop. I ended up wiring an email account into the Unity code, so that I could send user-generated reports out via email. This probably could have been done differently, but I was quite pleased to have gotten it working. I could see this being used for, like, an error-reporting command in a demo or something down the road.
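For anyone wondering what the moving parts of an emailed report look like, here is the general shape using Python's standard library rather than Unity. The addresses, file name, and report contents are placeholders, and this is only a sketch of the technique, not the project's code.

```python
import smtplib
from email.message import EmailMessage


def build_report_email(sender: str, recipient: str,
                       participant_id: str, csv_text: str) -> EmailMessage:
    """Package a session report as an email with a CSV attachment."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = f"Session report for {participant_id}"
    msg.set_content("Session report attached.")
    msg.add_attachment(csv_text, subtype="csv", filename=f"{participant_id}.csv")
    return msg


def send_report(msg: EmailMessage, host: str, port: int,
                username: str, password: str) -> None:
    """Send the message over an SSL connection to an SMTP server."""
    with smtplib.SMTP_SSL(host, port) as smtp:
        smtp.login(username, password)
        smtp.send_message(msg)


# Building the message needs no network access; sending is a separate step.
report = build_report_email("headset@example.com", "lab@example.com",
                            "P001", "task,score\nblock_stack,7\n")
```

One thing to keep in mind with this kind of setup is that the account credentials end up living on the device, so a dedicated throwaway account is the safer choice.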

Sequence Scheduler

The project has changed in scope a number of times, particularly regarding what a user's play session would look like. Originally the plan was for the user to pick the tasks they wanted to do via a menu or some interactive room environment, but over time that shifted into a pre-set sequence of tasks to ensure the user performed specific movements. This wasn't too hard to implement; really it was just a list of scene names. I did add a time-gate function where a user could have a week-long queue of tasks, but only be able to access a new sequence once the previous one was completed and 24 hours had passed.
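The time gate is simple enough to show in a few lines. This Python sketch treats the queue as a list of sequences (each a list of scene names) and unlocks the next one only when the previous one is finished and the wait has elapsed. The scene names and function name are invented, and I am assuming the 24 hours are counted from when the previous sequence was completed.

```python
from datetime import datetime, timedelta
from typing import List, Optional


def available_sequence(queue: List[List[str]],
                       completed_at: List[datetime],
                       now: datetime,
                       cooldown: timedelta = timedelta(hours=24)) -> Optional[List[str]]:
    """Return the next playable sequence, or None if it is locked or the queue is done.

    `completed_at` holds one timestamp per finished sequence, in order.
    """
    next_index = len(completed_at)
    if next_index >= len(queue):
        return None  # the whole queue has been played
    if completed_at and now - completed_at[-1] < cooldown:
        return None  # previous sequence finished too recently
    return queue[next_index]


week_queue = [["BlockStack", "BottlePour"], ["Snake3D", "WireBuzzer"]]
finished_day_one = datetime(2024, 1, 1, 9, 0)
tomorrow = available_sequence(week_queue, [finished_day_one],
                              now=finished_day_one + timedelta(hours=24))
```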

The tricky part, which I am working on currently, is to have a separate application that a clinician could use to design one of these sequences and deploy it to the headset wirelessly. I've got a few ideas on how I might approach this, but I've had difficulty with getting files onto and off of the headset. This is a work in progress, so I'll probably save it for a future blog post once I've gotten it working.

Mini Games / Tasks

I've mentioned the tasks in passing a few times, but haven't really explained what I mean by that. This is partially because the bulk of them were the summer projects of the temporary co-op students we had and not things I made myself. That said, adjustments were often requested later, or the students left tasks unfinished at the end of their term, so I ended up considerably involved with all of them.

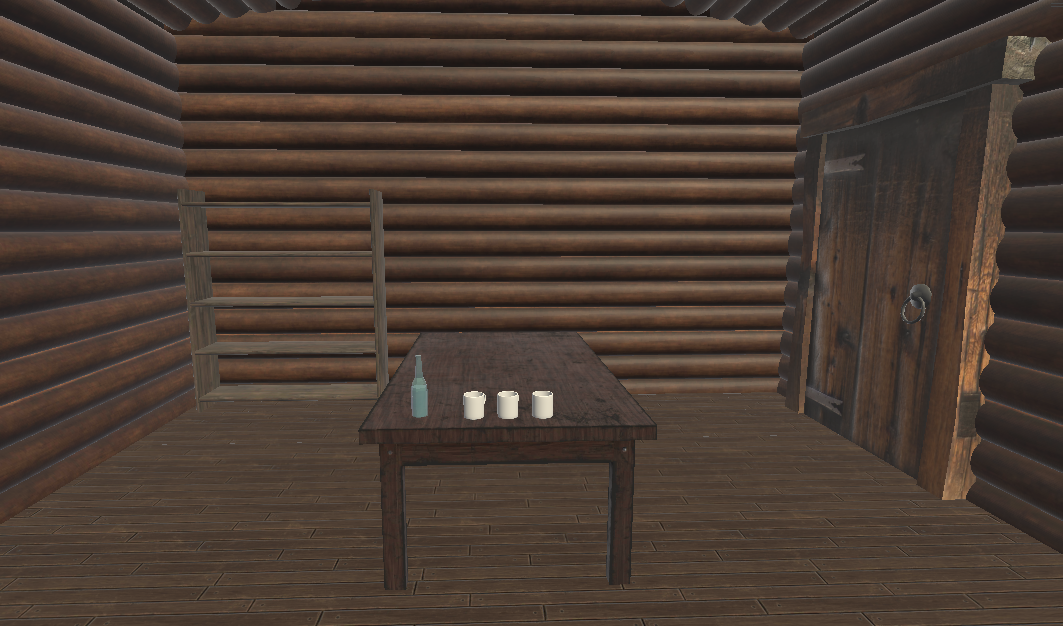

As part of the core experiment we were performing, we were curious about the difference in performance between a gamified task only possible in VR and a realistic task meant to emulate a real rehabilitation exercise. In total, we have developed twenty different tasks, with some of the more interesting ones being a 3D adaptation of the game "Snake" and a carnival-style wire-buzzer game. Most of the tasks were very simple, and just existed as an excuse to get a person to perform repetitive movements, like stacking blocks or petting a dog.

Aside from the classification of game/non-game, we tried to separate the tasks further into different types of movements and difficulties. The categories we had were reaching tasks where a user just has to move their arm, grasping tasks where a user just needs to focus on opening or closing their hand, and reach-and-grasp "precision tasks" where a user has to do both. How we set the difficulty for a task varied based on the specifics of the activity. For example, in a block stacking game we make the blocks bigger as the difficulty increases (remember this is a rehabilitation program, so while a bigger block tower becomes easier to balance, the height they have to reach upwards increases). My personal favourite that I worked on is the bottle pour activity, because I wrote incredibly overengineered code to emulate how liquid pours from a bottle (i.e., the amount you have to tilt the bottle increases as it is emptied).
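To show why the tilt has to increase as the bottle empties, here is a deliberately simplified Python model. It is not the project's pouring code. It assumes the bottle is a flat 2D rectangle that is open across its whole top, and the function name and dimensions are made up for the example.

```python
import math


def pour_threshold_deg(fill: float, height: float, width: float) -> float:
    """Tilt angle (degrees from upright) at which liquid reaches the lip.

    fill   -- fraction of the bottle that is full, 0.0 to 1.0
    height -- inner height of the bottle
    width  -- inner width of the bottle (same units as height)
    """
    if not 0.0 <= fill <= 1.0:
        raise ValueError("fill must be between 0 and 1")
    if height <= 0 or width <= 0:
        raise ValueError("height and width must be positive")
    if fill == 0.0:
        return 90.0  # nothing left; treat an empty bottle as never pouring early
    if fill >= 0.5:
        # Liquid still covers the whole base: the surface pivots about its midpoint.
        tan_theta = 2.0 * (1.0 - fill) * height / width
    else:
        # Liquid has pulled away from one wall and forms a triangle in the corner.
        tan_theta = height / (2.0 * fill * width)
    return math.degrees(math.atan(tan_theta))


# A full bottle pours at the slightest tilt; a nearly empty one needs a steep tilt.
angles = [pour_threshold_deg(f, height=0.2, width=0.1) for f in (1.0, 0.75, 0.5, 0.25)]
```

Both branches give the same angle at exactly half full, so the threshold rises smoothly as the bottle empties.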